LCF

Python Web Crawler Engineer

- Company: Tencent

- Work experience: 1 year

- Part-time daily rate: 500 CNY / 8 hours

- Availability: on-site on weekdays (freelancer)

- Location: Shanghai, Pudong

Technical Skills

Crawler fundamentals: issuing requests, handling responses, storing data, and countering common anti-crawling measures.

Working knowledge of Scrapy, distributed crawlers, crawler performance tuning, large-scale data crawling, proxies, and app scraping.

Familiar with multithreaded programming, network programming, and the HTTP protocol.
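As an illustration of the multithreaded fetching mentioned above, here is a minimal sketch using the standard library's `ThreadPoolExecutor`. The names `fetch_all` and `fake_fetch` are hypothetical; `fake_fetch` stands in for a real HTTP call so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(urls, fetch, max_workers=8):
    """Run `fetch` over every URL on a thread pool, keeping input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as `urls`.
        return list(pool.map(fetch, urls))


def fake_fetch(url):
    # Hypothetical stand-in for requests.get(url).text (assumption, not from the profile).
    return f"<html>{url}</html>"


pages = fetch_all(["https://example.com/a", "https://example.com/b"], fake_fetch)
```

Threads suit this workload because each worker spends most of its time waiting on the network rather than on the CPU.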

Has built complete crawler projects end to end.

Anti-crawling experience: cookies, IP pools, CAPTCHAs, and similar measures.
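One way an IP pool like the one mentioned above might be organized is a round-robin rotation that skips proxies marked as failed. This is a sketch under assumptions: the `ProxyPool` class and the proxy addresses are hypothetical and not taken from any project listed here.

```python
import itertools


class ProxyPool:
    """Round-robin over proxies, skipping ones marked as failed."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._bad = set()
        self._cycle = itertools.cycle(self._proxies)

    def get(self):
        # Try each proxy at most once per call before giving up.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("no working proxies left")

    def mark_bad(self, proxy):
        # Exclude a proxy after a timeout or a block response.
        self._bad.add(proxy)


# Hypothetical addresses, for illustration only.
pool = ProxyPool(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
first = pool.get()
pool.mark_bad(first)
second = pool.get()
```

With `requests`, the chosen proxy would typically be passed as `proxies={"http": proxy, "https": proxy}` on each call.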

Proficient with distributed crawling setups.

Project Experience

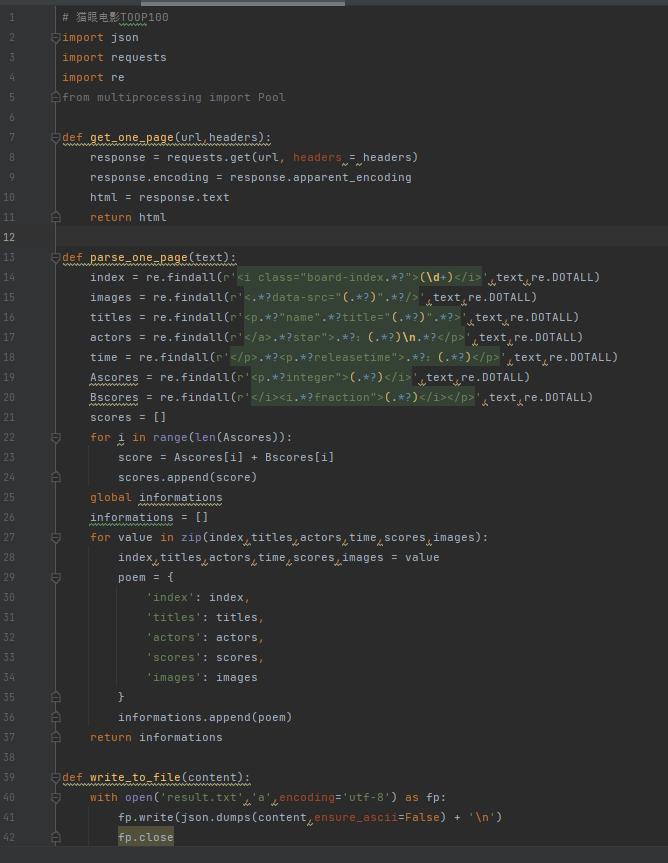

```python
import json
import re
from multiprocessing import Pool

import requests


def get_one_page(url, headers):
    # Fetch the page and decode it using the encoding detected from the body.
    response = requests.get(url, headers=headers)
    response.encoding = response.apparent_encoding
    return response.text


def parse_one_page(text):
    # Each field is extracted with its own non-greedy regex over the raw HTML.
    index = re.findall(r'<i class="board-index.*?">(\d+)</i>', text, re.DOTALL)
    images = re.findall(r'<.*?data-src="(.*?)".*?/>', text, re.DOTALL)
    titles = re.findall(r'<p.*?"name".*?title="(.*?)".*?>', text, re.DOTALL)
    actors = re.findall(r'</a>.*?star">.*?:(.*?)\n.*?</p>', text, re.DOTALL)
    time = re.findall(r'</p>.*?<p.*?releasetime">.*?:(.*?)</p>', text, re.DOTALL)
    Ascores = re.findall(r'<p.*?integer">(.*?)</i>', text, re.DOTALL)
    Bscores = re.findall(r'</i><i.*?fraction">(.*?)</i></p>', text, re.DOTALL)

    # The score is rendered as an integer part plus a fraction part; join them.
    scores = [a + b for a, b in zip(Ascores, Bscores)]

    informations = []
    # Use distinct loop names so the lists being zipped are not overwritten.
    for idx, title, actor, _time, score, image in zip(index, titles, actors, time, scores, images):
        informations.append({
            'index': idx,
            'titles': title,
            'actors': actor,
            'scores': score,
            'images': image,
        })
    return informations


def write_to_file(content):
    # Append one JSON object per line; the with-block closes the file.
    with open('result.txt', 'a', encoding='utf-8') as fp:
        fp.write(json.dumps(content, ensure_ascii=False) + '\n')


def main(offset):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)'
                      ' Chrome/92.0.4515.107 Safari/537.37',
        'Connection': 'keep-alive',
        'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image
```
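The listing imports `Pool` and defines `main(offset)`, which suggests the pages are crawled in parallel by offset. A minimal sketch of that pattern follows, under stated assumptions: `page_offsets` and `crawl_page` are hypothetical names, `crawl_page` stands in for `main(offset)`, and the layout of 100 entries at 10 per page is a guess, not something the listing confirms.

```python
from multiprocessing import Pool


def page_offsets(total=100, page_size=10):
    # Assumption: 100 entries at 10 per page, so offsets 0, 10, ..., 90.
    return list(range(0, total, page_size))


def crawl_page(offset):
    # Hypothetical stand-in for main(offset); a real worker would fetch,
    # parse, and write the page that starts at this offset.
    return offset


if __name__ == "__main__":
    # Each worker process handles one offset at a time.
    with Pool(processes=4) as pool:
        done = pool.map(crawl_page, page_offsets())
    print(done)
```

The `if __name__ == "__main__"` guard matters on platforms that spawn worker processes by re-importing the module.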

Case Showcase

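- Maoyan Movies TOP100

Mainly involves a language's crawler libraries, HTML parsing, and content storage; more complex cases also require URL deduplication, simulated login, CAPTCHA recognition, multithreading, proxies, and mobile scraping.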

-

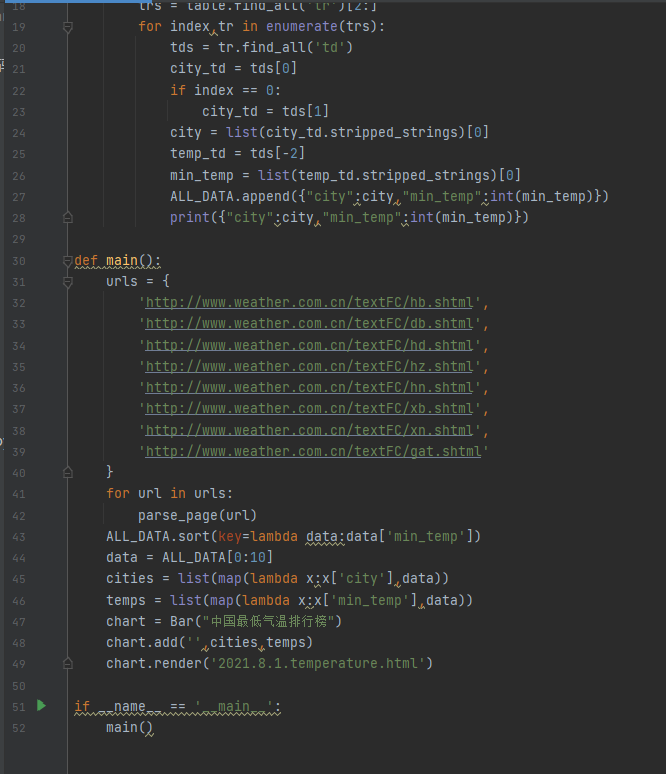

中国天气网数据爬取

爬虫知识,主要涉及一门语言的爬虫库、html解析、内容存储等,复杂的还需要了解URL排重、模拟登录、验证码识别、多线程、代理、移动端抓取
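URL deduplication, mentioned in both cases above, can be sketched as a set of normalized URLs. This is an illustration under assumptions: `normalize` and `SeenUrls` are hypothetical names, and the normalization rules (lowercase host, drop the fragment) are one reasonable choice rather than the method used in these projects.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lowercase scheme and host and drop the fragment so equivalent URLs compare equal."""
    parts = urlsplit(url)
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, "")
    )


class SeenUrls:
    """Tracks which normalized URLs have already been queued."""

    def __init__(self):
        self._seen = set()

    def add(self, url):
        """Return True if the URL is new, False if it was already queued."""
        key = normalize(url)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True


seen = SeenUrls()
candidates = [
    "https://Example.com/top",
    "https://example.com/top#list",      # same page, differs only by fragment
    "https://example.com/top?offset=10",  # different query, so a different page
]
queue = [u for u in candidates if seen.add(u)]
```

For crawls too large to hold in memory, the in-process set would usually be swapped for a shared store or a Bloom filter.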
