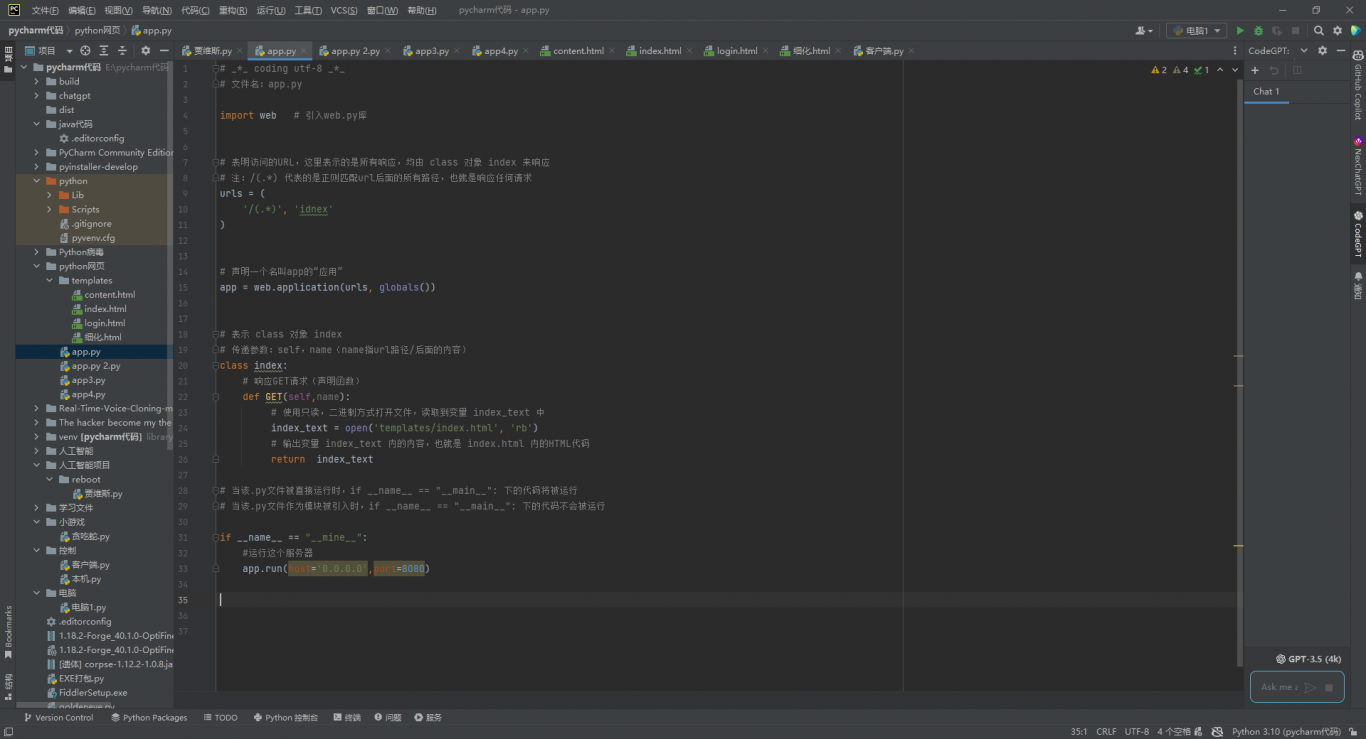

Python Web Page Development

Example Overview

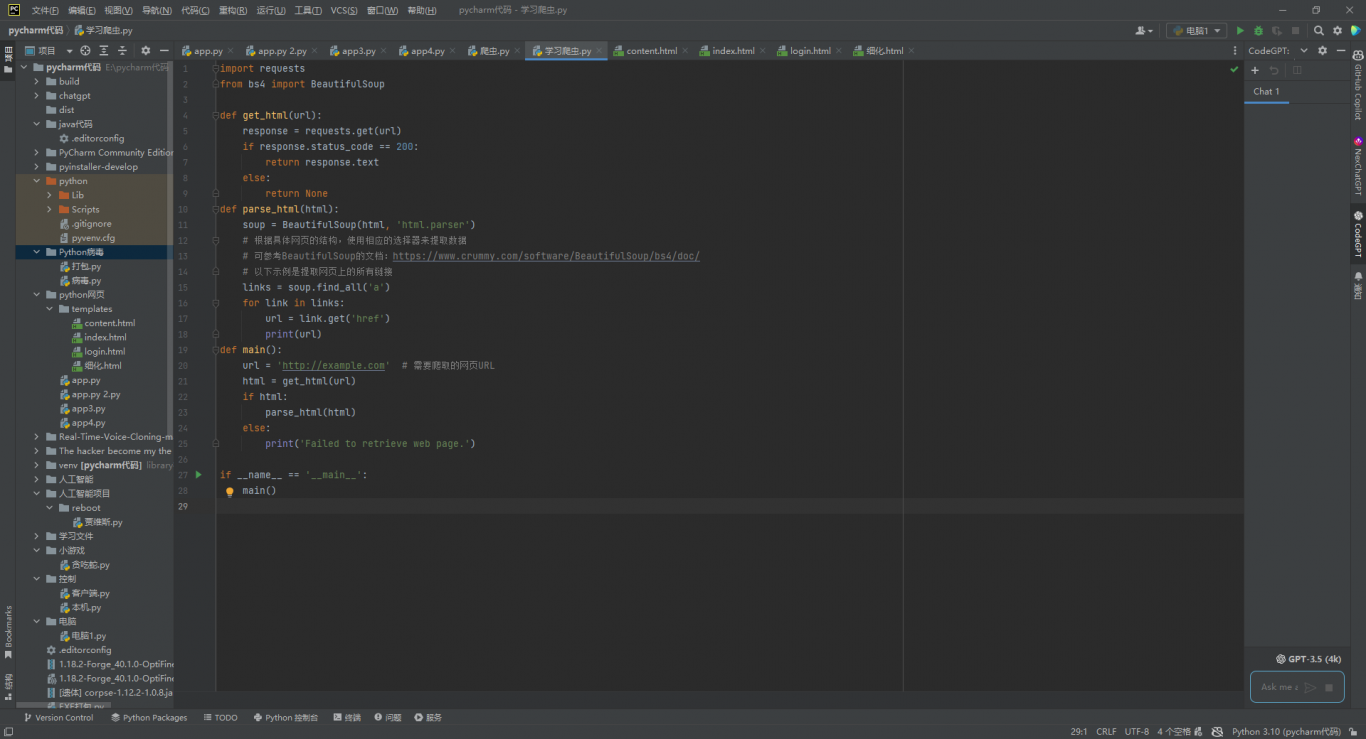

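    import requests
    from bs4 import BeautifulSoup


    def get_html(url):
        # Fetch the page; return its HTML text on HTTP 200, otherwise None.
        # The timeout keeps the request from hanging indefinitely.
        response = requests.get(url, timeout=10)
        if response.status_code == 200:
            return response.text
        else:
            return None


    def parse_html(html):
        soup = BeautifulSoup(html, 'html.parser')
        # Use selectors that match the structure of the target page to extract data.
        # See the BeautifulSoup documentation: https://www.crummy.com/software/BeautifulSoup/bs4/doc/
        # The example below extracts every link on the page.
        links = soup.find_all('a')
        for link in links:
            # get() returns None when the <a> tag has no href attribute
            href = link.get('href')
            print(href)


    def main():
        url = 'http://example.com'  # URL of the page to scrape
        html = get_html(url)
        if html:
            parse_html(html)
        else:
            print('Failed to retrieve web page.')


    if __name__ == '__main__':
        main()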
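
The link extraction above prints each `href` value exactly as it appears in the page, so the output can include `None`, relative paths, and non-web schemes such as `mailto:`. The following is a minimal sketch of one way to clean those values up using only the standard library. The helper name `normalize_links` and the sample values are hypothetical and not part of the original example.

```python
from urllib.parse import urljoin, urlparse


def normalize_links(base_url, hrefs):
    """Return absolute http(s) URLs, de-duplicated in first-seen order.

    Hypothetical helper: `hrefs` is assumed to be an iterable of values
    such as those returned by link.get('href'), which may be None.
    """
    seen = set()
    result = []
    for href in hrefs:
        if not href:
            # Skip None (tag without href) and empty strings
            continue
        # Resolve relative paths against the URL of the page they came from
        absolute = urljoin(base_url, href.strip())
        if urlparse(absolute).scheme not in ("http", "https"):
            # Drop mailto:, javascript:, and other non-web schemes
            continue
        if absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


# Illustrative input only; these values are made up for the sketch
sample_hrefs = ["/about", None, "mailto:someone@example.com", "/about"]
print(normalize_links("http://example.com/index.html", sample_hrefs))
```

Inside `parse_html`, the values collected with `link.get('href')` could be passed as `hrefs`, with the URL of the scraped page as `base_url`.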

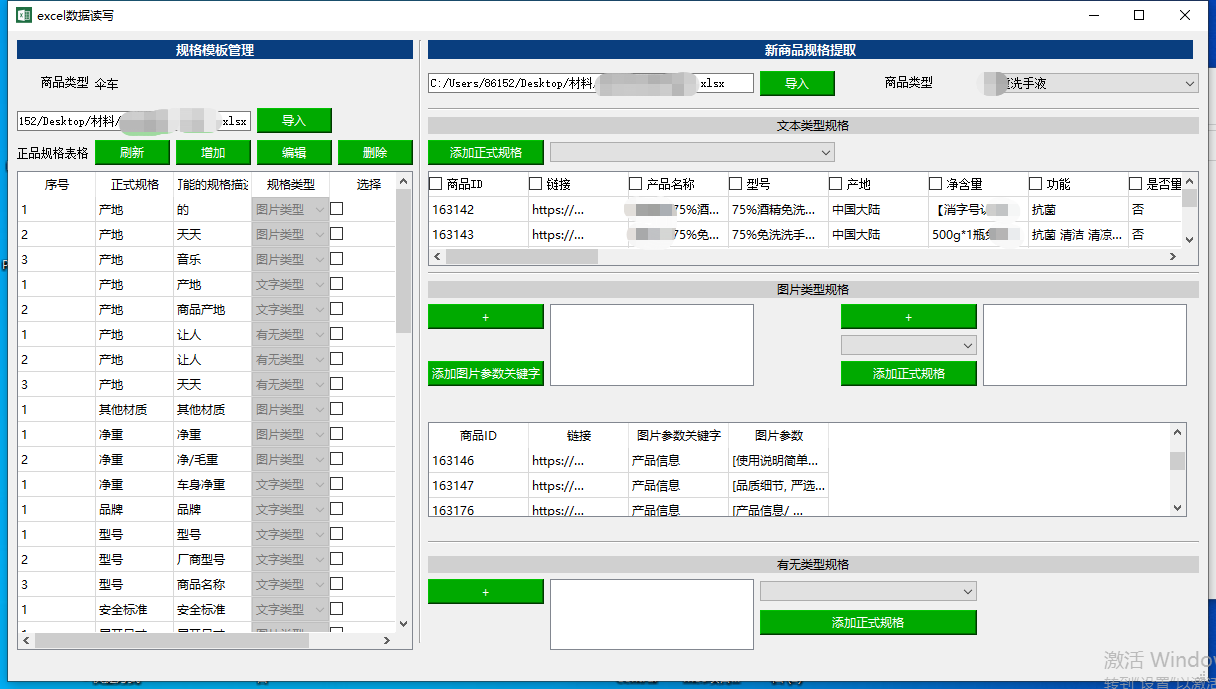

Example Image