Case Introduction

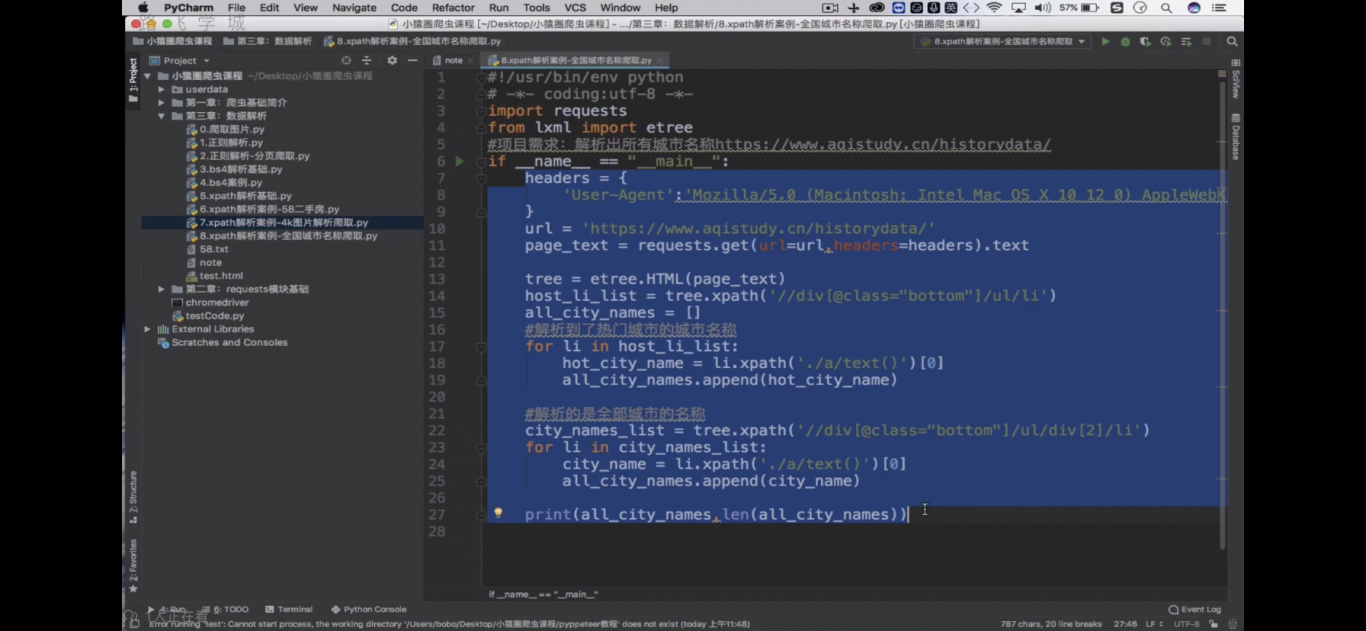

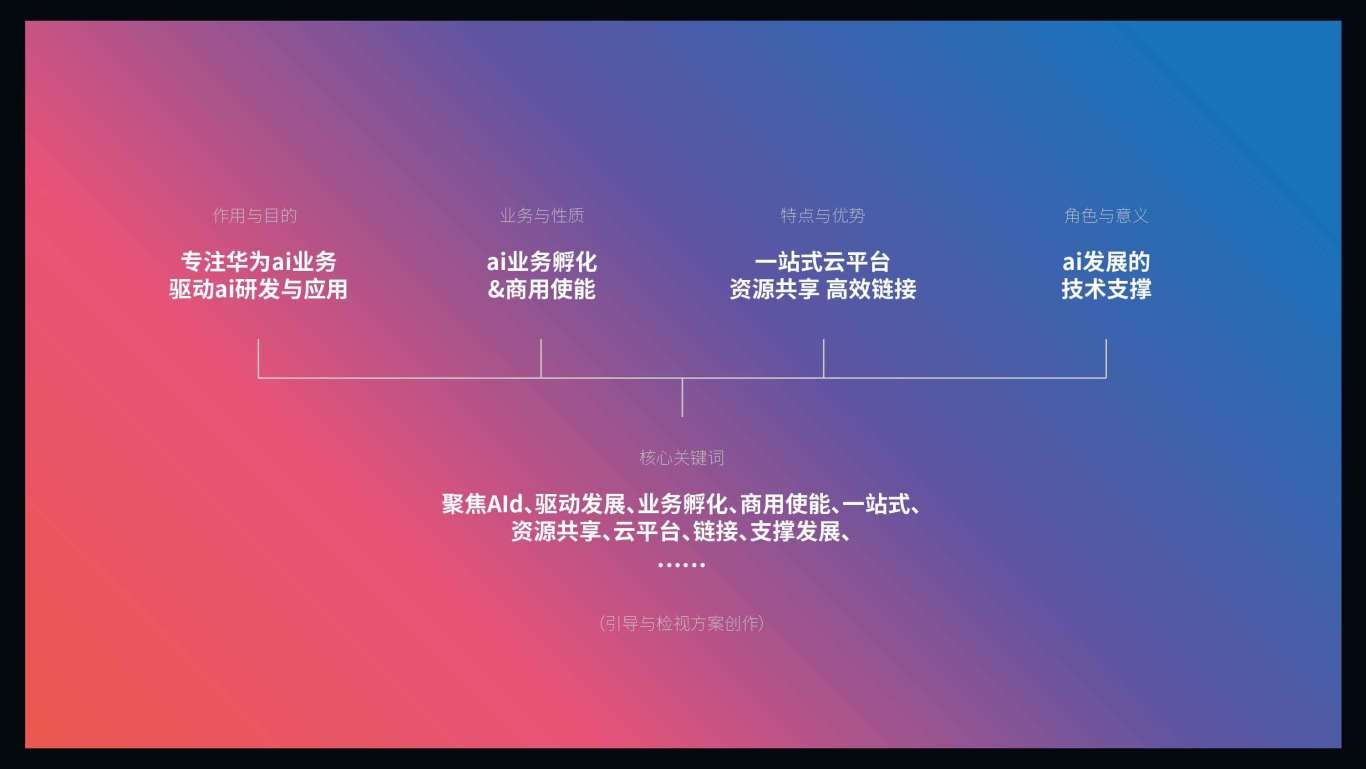

In computer vision, generating natural language captions from video input is a challenging task. Existing approaches usually treat a video as a sequence of features and feed it into a Long Short-Term Memory (LSTM) network to generate the caption. To obtain a richer and more detailed representation of video content, a Multimodal Interaction Video Captioning Network based on Semantic Association Graph (MIVCN) is developed for this task. The network consists of two modules: the Semantic Association Graph Module (SAGM) and the Multimodal Attention Constraint Module (MACM).

The proposed MIVCN achieves the best caption generation performance on MSVD: 56.8%, 36.4%, and 79.1% on the BLEU@4, METEOR, and ROUGE-L evaluation metrics, respectively. It also reports superior BLEU@4, METEOR, and ROUGE-L results on MSR-VTT compared to state-of-the-art methods.
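
To make the BLEU@4 figure concrete, the following is a minimal sketch of a sentence-level BLEU@4 score using clipped n-gram precision (n = 1 to 4, uniform weights) and a brevity penalty. It is an illustration only, not the evaluation code behind the numbers above: the function names `ngrams` and `bleu4` are hypothetical, tokenization is assumed to be plain whitespace splitting, no smoothing is applied, and published results are normally computed at corpus level and reported as percentages.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, references):
    """Sentence-level BLEU@4, uniform weights, no smoothing (illustrative sketch)."""
    cand = candidate.split()  # assumption: whitespace tokenization
    refs = [r.split() for r in references]
    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngrams(cand, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0  # candidate is shorter than n tokens
        # Clip each candidate n-gram count by its maximum count in any reference.
        max_ref = Counter()
        for ref in refs:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        if clipped == 0:
            return 0.0  # without smoothing, one zero precision zeroes the score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty, using the reference whose length is closest to the candidate's.
    c = len(cand)
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the four precisions, scaled by the brevity penalty.
    return bp * math.exp(sum(log_precisions) / 4)


if __name__ == "__main__":
    reference = ["a man is playing a guitar"]
    print(bleu4("a man is playing a guitar", reference))
    print(bleu4("a man is playing a piano", reference))
```

In the second call, the four clipped precisions are 5/6, 4/5, 3/4, and 2/3, so the score is the fourth root of their product, 1/3. A single wrong word therefore lowers BLEU@4 noticeably on short captions.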

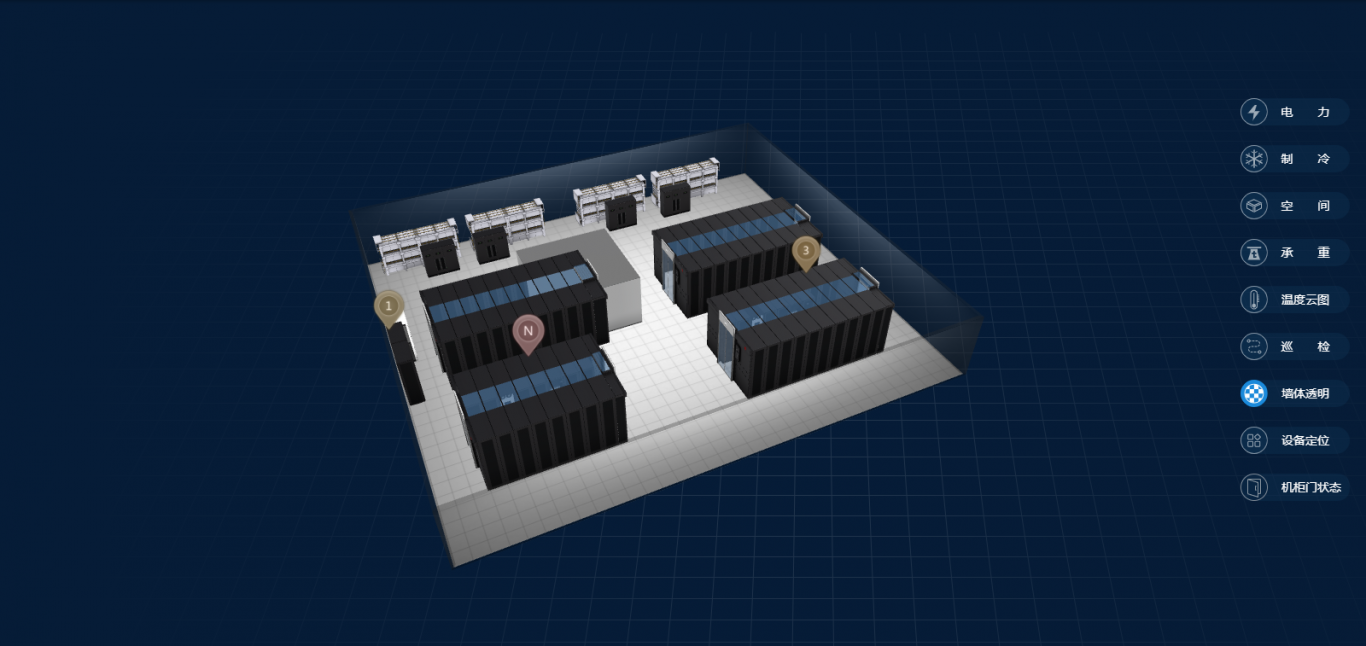

Case Images