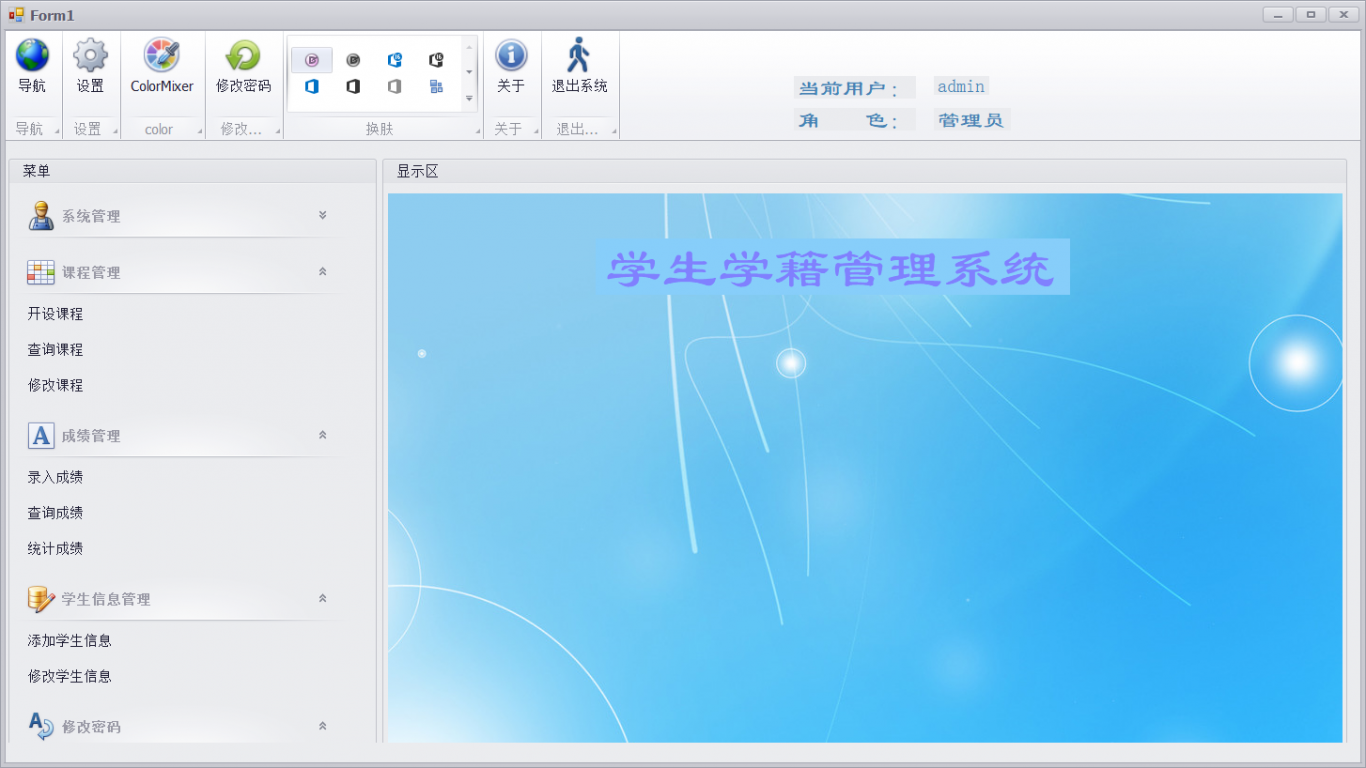

Scraping 千千小说网 with Python and batch-downloading a novel

Example overview

    from fake_useragent import UserAgent
    import requests
    from lxml import etree


    def takeurl():
        # Send a random User-Agent so the requests look like they come from a browser.
        headers = {'User-Agent': UserAgent().random}
        # Table-of-contents page of the novel.
        url = 'https://www.qqxsnew.com/95/95819/'
        nodes = etree.HTML(requests.get(url, headers=headers).content)
        # Chapter entries in the list; the first 12 <dd> items are skipped.
        data = nodes.xpath("/html/body/div[@id='wrapper']/div[@id='main']/div[@class='box_con'][2]/div[@id='list']/dl/dd[position()>12]")
        for i in data:
            title = i.xpath('./a/text()')[0]
            newurl = 'https://www.qqxsnew.com' + i.xpath('./a/@href')[0]
            print(title, newurl)
            # Fetch the chapter page and extract the text nodes of the content div.
            result = requests.get(newurl, headers=headers).content
            response = etree.HTML(result).xpath("/html/body/div[@id='wrapper']/div[@id='main']/div[@class='content_read']/div[@class='box_con']/div[@id='content']/text()")
            # One file per chapter; the target folder must already exist.
            path = r"J:\study\小说" + "\\" + title + '.txt'
            with open(path, 'a', encoding='UTF-8') as f:
                for x in response:
                    print(x)
                    f.write(x + '\n')
            print("Downloading", title)


    takeurl()
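The script names each output file after the chapter title. A title may contain characters that Windows does not allow in file names (for example `?` or `:`), and the target folder may not exist yet. The following is a minimal sketch of one way to handle both cases. `safe_filename` and `chapter_path` are hypothetical helpers, not part of the original script, and the `.txt` extension is an assumption.

```python
import re
from pathlib import Path

# Characters that Windows does not allow in file names.
_ILLEGAL = re.compile(r'[\\/:*?"<>|]')


def safe_filename(title: str, ext: str = ".txt") -> str:
    """Hypothetical helper: turn a chapter title into a usable file name."""
    # Replace each illegal character with "_" and trim whitespace and trailing dots.
    cleaned = _ILLEGAL.sub("_", title).strip().rstrip(".")
    # Fall back to a placeholder name if nothing is left.
    return (cleaned or "untitled") + ext


def chapter_path(base_dir: str, title: str) -> Path:
    """Hypothetical helper: build the output path, creating the folder if needed."""
    folder = Path(base_dir)
    folder.mkdir(parents=True, exist_ok=True)
    return folder / safe_filename(title)
```

With these helpers, the path could be built as `chapter_path(r"J:\study\小说", title)` and passed to `open` as before.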

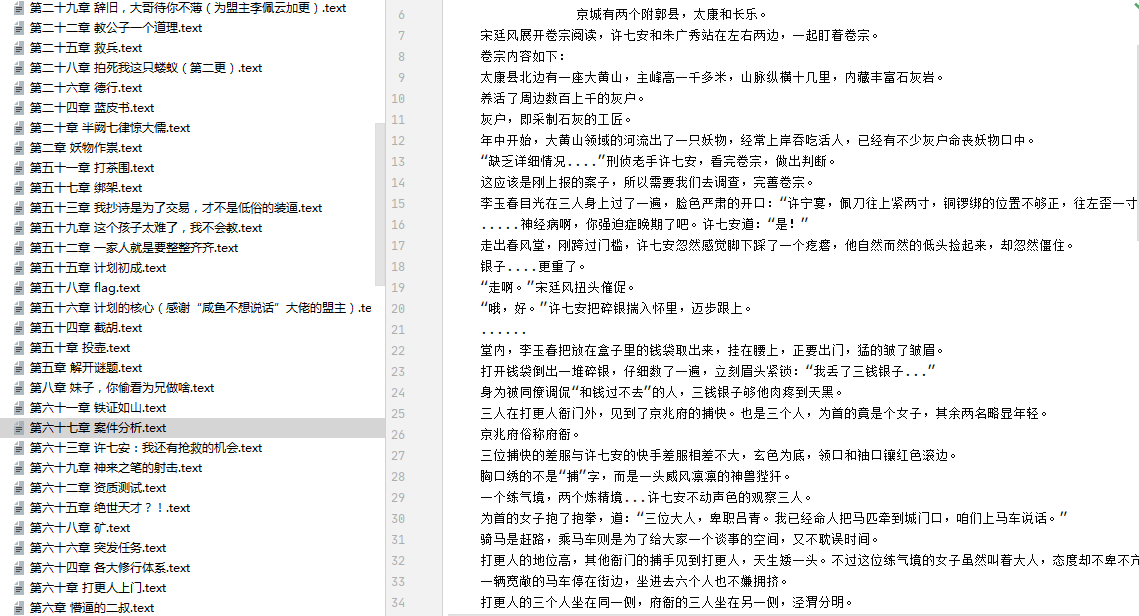

Example screenshot